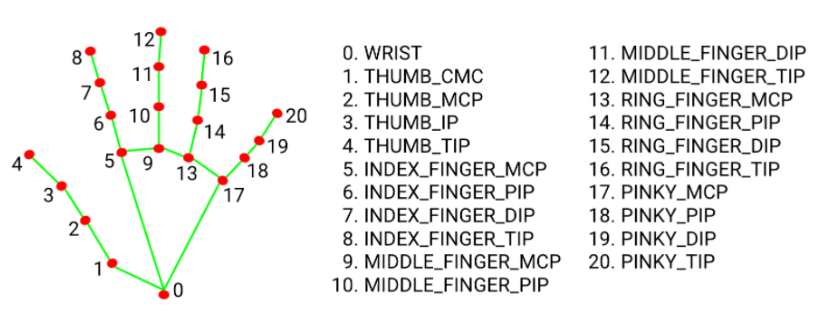

As my research progressed, I looked at the current industry leaders in eye tracking technology, such as Tobii and EyeGaze. I realised that they have recently started to address accessibility in video games, and that Tobii's latest release (HERE) already touches on my project idea. Because of this, I started to look at different ways to offer accessibility through OpenCV. During my OpenCV research I came across a hand and finger tracker built with MediaPipe.

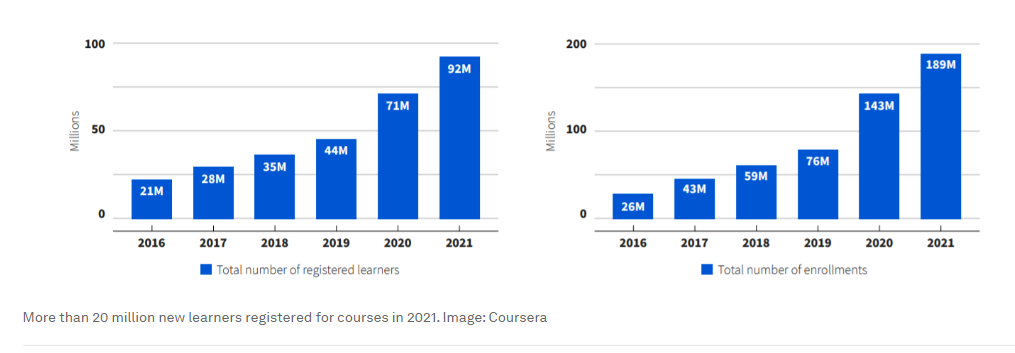

According to the World Economic Forum and the Harvard Business Review, online learning has continued to grow since Covid. More and more people are enrolling in online courses, both at university and well after they have graduated, in an attempt to acquire more skills for potential work opportunities.

While these courses offer a lot of information and some minimal interactivity, they mostly consist of lecturers or tutors going through a presentation they have prepared in advance. They lack the interaction between teachers and students that one would see in a physical class, which makes it harder for both teacher and student to ask questions and receive instant feedback. As schools have become more comfortable with the concept of remote learning, students have voiced problems with their ability to focus (Times Higher Education). Whiteboards are used in class to hold student attention, eliminating distractions and giving individuals something to focus on, while also letting teachers create a more engaging and creative class where they can interact with their students (i3 Technologies).

Project Direction

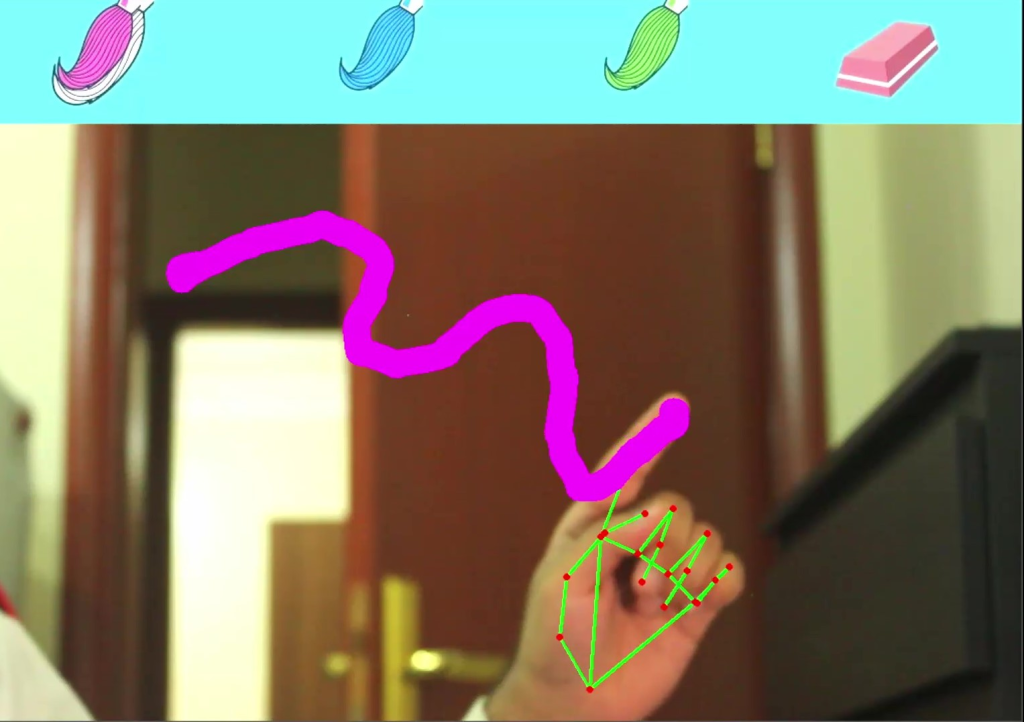

Having discovered this, I have decided to shift my project direction towards an OpenCV-based classroom. Tutors will be able to create engaging and interactive classes by turning their desktop into a digital whiteboard that they control using hand gestures and their webcam, while students can point at a specific location to ask a question and receive instant feedback, just like raising a hand in a physical class.

People have already taken advantage of MediaPipe hand recognition to create very basic drawing applications, but I am planning to take it a step further by allowing the user to paint on and control their desktop directly. This means adding mouse integration and the ability to screen record the desktop live as it is being painted on, to allow for this live interaction with the students. Ideally, my application would allow the viewers to interact with it in the same way when given permission by the teacher.
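To give a rough idea of what the mouse integration could look like, here is a minimal sketch of the two pieces I expect to need: turning a normalised hand landmark into a screen position, and detecting a thumb-to-index pinch as a "click". It assumes the 21 hand landmarks arrive as `(x, y)` pairs in the 0 to 1 range, with index 4 as the thumb tip and index 8 as the index fingertip, as in MediaPipe's hand landmark numbering. The function names, the `mirror` option and the `0.05` pinch threshold are my own placeholders, not part of any library, and would need tuning against a real webcam feed.

```python
import math

# Landmark indices from MediaPipe's hand landmark numbering.
THUMB_TIP = 4
INDEX_TIP = 8


def to_screen(x_norm, y_norm, screen_w, screen_h, mirror=True):
    """Map a normalised landmark (0..1) to a pixel position on the screen.

    mirror=True flips the x axis so that moving the hand to the right
    moves the cursor to the right when facing a webcam (an assumption
    about how the camera image is oriented).
    """
    x = 1.0 - x_norm if mirror else x_norm
    # Clamp so a hand slightly outside the frame cannot leave the screen.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y_norm, 0.0), 1.0)
    return round(x * (screen_w - 1)), round(y * (screen_h - 1))


def is_pinching(landmarks, threshold=0.05):
    """Return True when the thumb tip and index fingertip are close together.

    `landmarks` is a sequence of 21 (x, y) pairs in normalised coordinates.
    The threshold is a hypothetical starting value, not a measured one.
    """
    tx, ty = landmarks[THUMB_TIP]
    ix, iy = landmarks[INDEX_TIP]
    return math.hypot(tx - ix, ty - iy) < threshold


if __name__ == "__main__":
    # Fake hand: every landmark in the centre of the frame, so it is pinching.
    hand = [(0.5, 0.5)] * 21
    print(to_screen(*hand[INDEX_TIP], 1920, 1080))
    print(is_pinching(hand))
```

In the real application the pixel position would be handed to a mouse automation library (for example `pyautogui.moveTo`), and the pinch state would decide whether the cursor is drawing or just moving.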